1 Statistical Rethinking

TOC

Summaries

Questions

General Notes

Chapter 2

Chapter 3 Sampling Chapter 4 Linear Models, CIs, Splines

Chapter 5 Multi-Variable Linear Models, , Diagnostic Plots, Categoricals, DAGs

Chapter 6 Colliders, Multicollinearity, Post-Treatment Bias

Chapter 7 Information Theory, Prediction Metrics, Model Comparison

Chapter 8 Interactions

Chapter 9 MCMC

Chapter 10 GLM Concepts

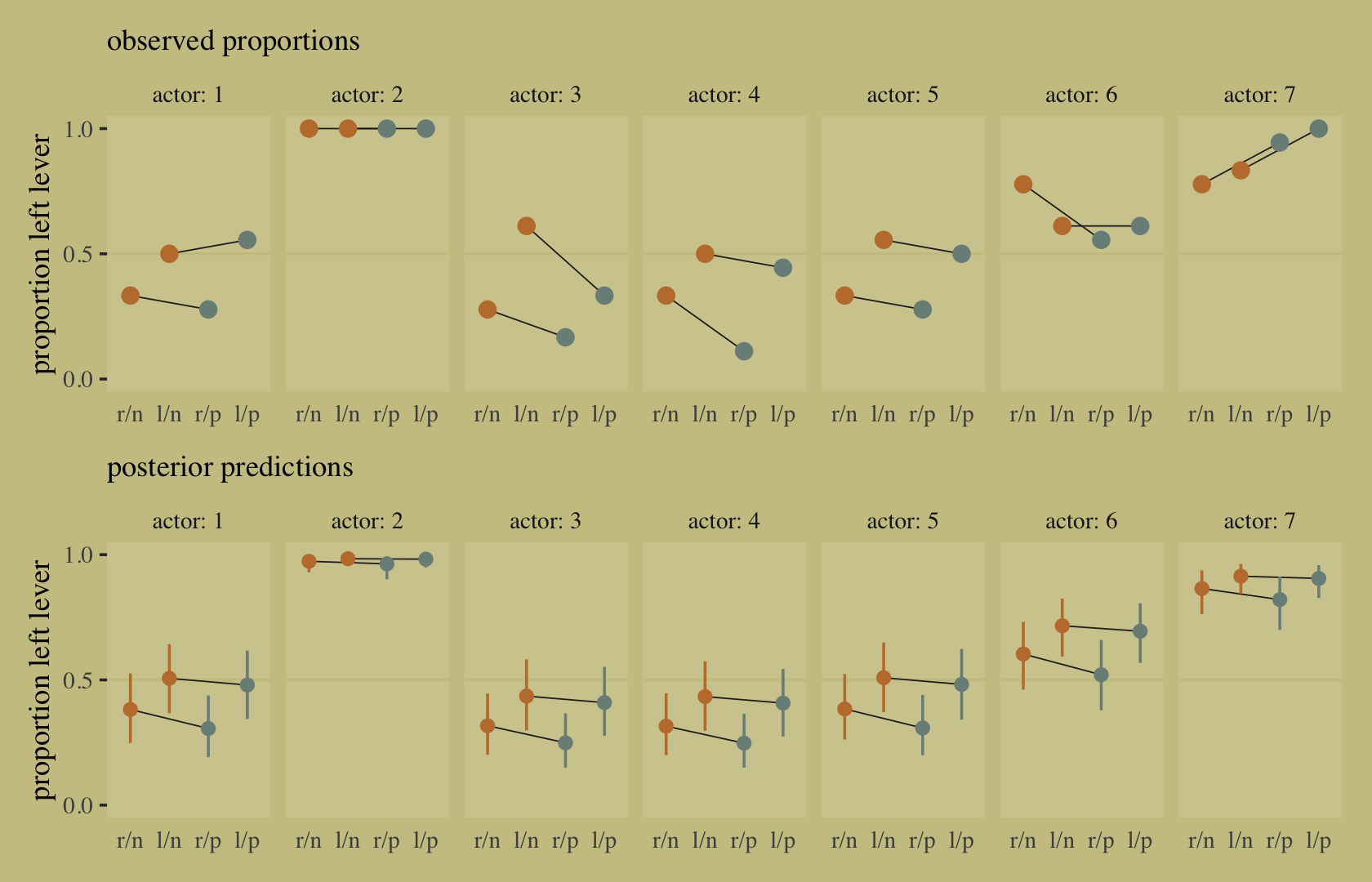

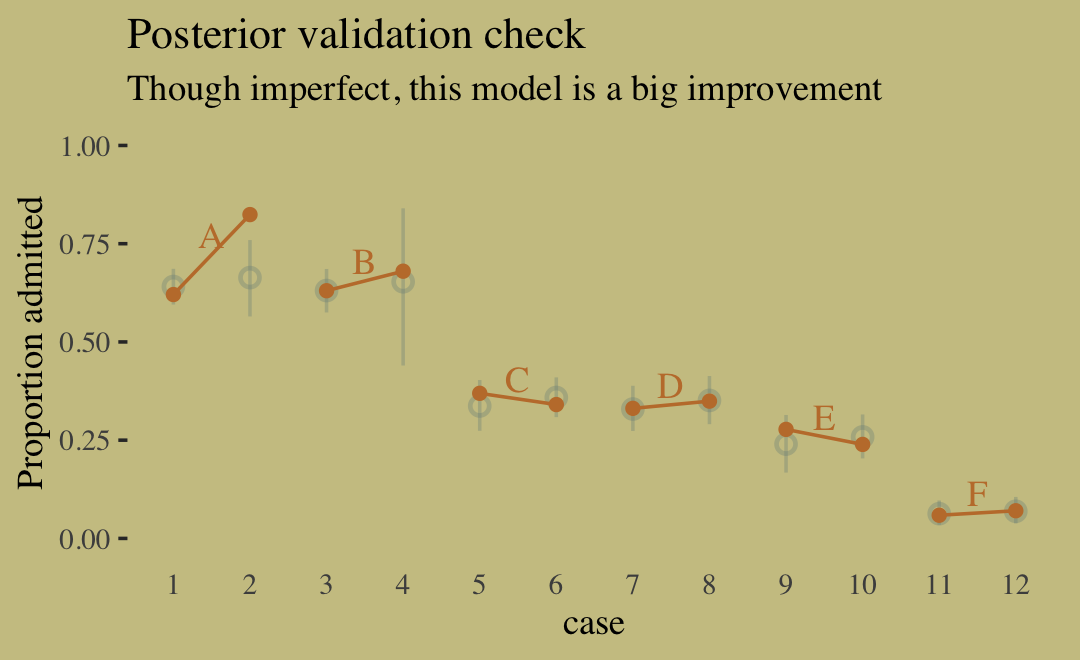

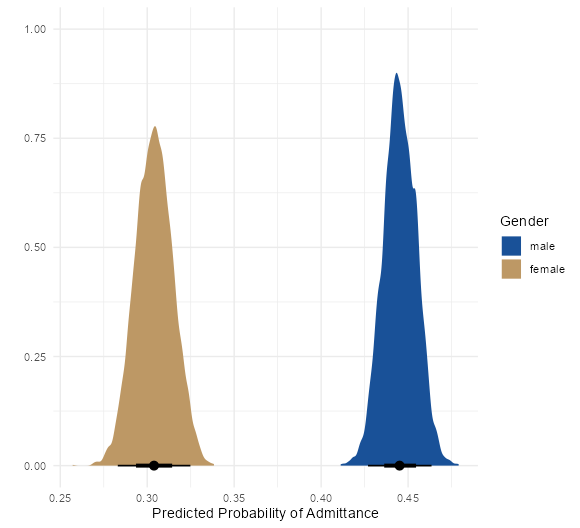

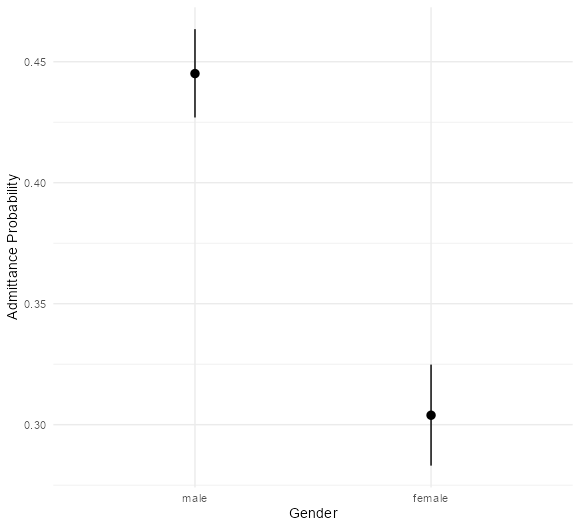

Chapter 11 Binomial and Poisson

Chapter 12

Chapter 13

Chapter 14

Chapter 15

Chapter 16

My Appendix

{brms} syntax and functions

{rethinking} functions

Stan code Examples

Summaries

- Ch 2

- Using counts

- Garden of forking paths - all the potential ways we can get our sample data (sequence of marbles drawn from a bag) given a hypothesis is true (e.g. 1 blue, 3 white marbles in a bag)

- “Conjectures” are potential outcomes (1 blue and 3 white marbles in a bag) and each conjecture is a path in the garden of forking paths

- With data, we count the number of ways (forking paths) the data is consistent with each conjecture (aka likelihood)

- With new data, old counts become a prior, and updated total path counts = prior * new path counts (i.e. prior * likelihood)

- As components of a model

- The conjecture (aka parameter value), p, with the most paths is our best guess at the truth. They’re converted to probabilities (i.e. probability of a parameter value) and now called “relative plausibilities”.

- The likelihood is a function (e.g. dbinom) that gives the probability of an observation given a parameter value (conjecture)

- Prior probabilities (or relative plausibilities) must be assigned to each unknown parameter.

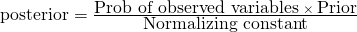

- The updated relative plausibility of a conjecture, p, is the posterior probability

- posteriorp1 = (priorp1 * likelihoodp1) / sum(all prior*likelihood products for each possible value of parameter, p)

- The denominator normalizes the updated plausability so that the sum of updated plausabilities for all the parameter values is 1 (necessary to formally be a proability density)

dbinom(x, size, prob)- probability density function for the binomial distribution- x = # of observations of the event (e.g. hitting water on the globe)

- size = sample size (N) (number of tosses)

- prob = parameter value (conjecture)(i.e. hypothesized proportion of water on the earth) (p)

- Using counts

- Ch 3 - Sampling

- Sampling from the posterior

- rbinom - random variable generator of the binomial distribution

- Summarizing the posterior - mean, median, MAP, HPDI

- posterior prediction distribution

- GOF Question: If we were to make predictions about the most probable p using this model, how consistent is our model with the data?

- Answer: if the shape of the PPD matches the shape of the sampled posterior distribution, then the model is consistent with the data. (i.e. good fit)

- GOF Question: If we were to make predictions about the most probable p using this model, how consistent is our model with the data?

- Sampling from the posterior

- Ch 4 - Linear Models

- The posterior distribution is the probability density of every combination of all the parameter values

- posterior distribution: after considering every possible combination of the parameters, it’s the assigned relative plausibilities to every combination, conditional on this model and these data. (from Ch.6)

- The posterior is the joint distribution of all combinations of the parameters at the same time, Pr(parameters|outcome, predictors)

- Many posterior distributions are approximately gaussian/multivariate gaussian

- Example: intercept model

- it’s a density of every combination of value of mean and sd

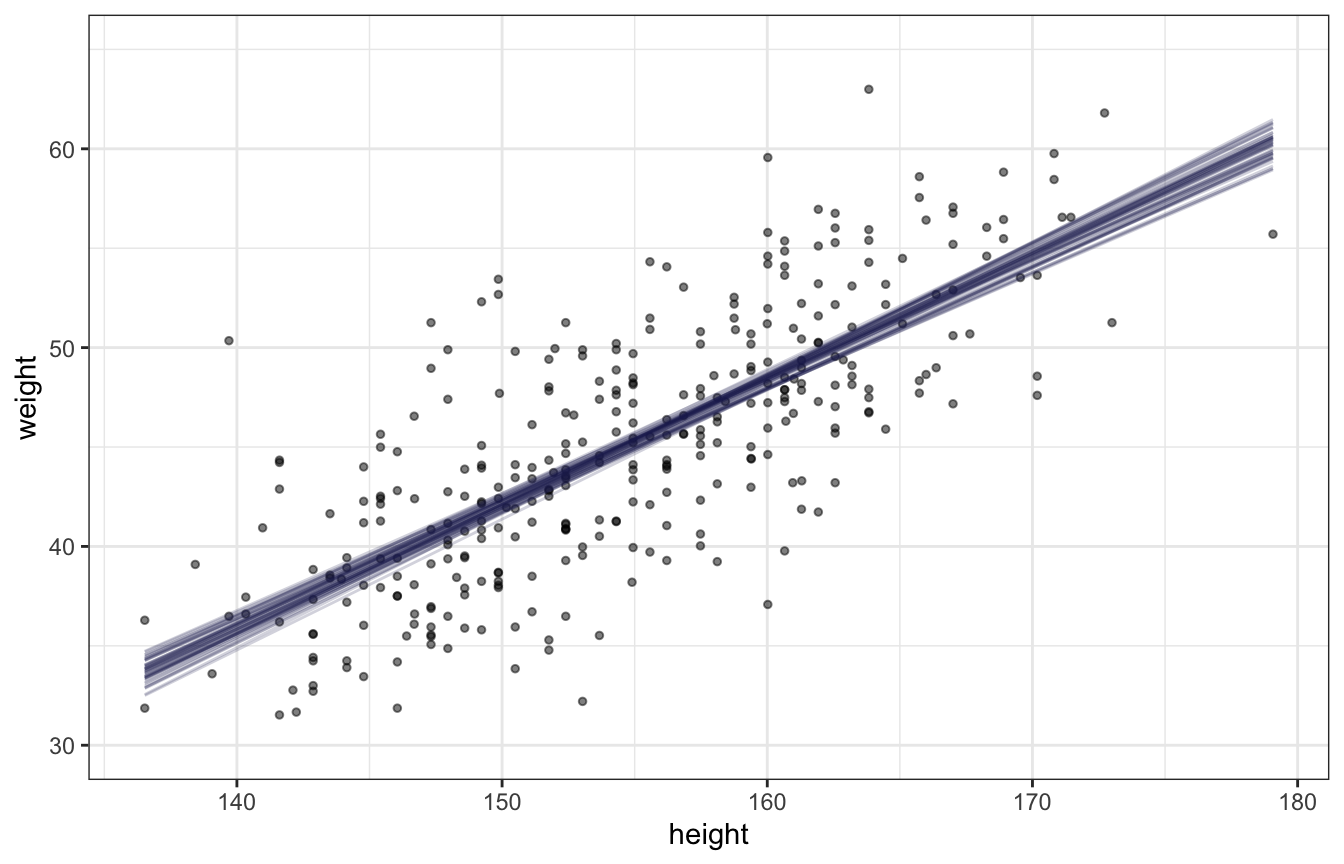

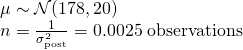

- Example: Height ~ Weight

- The posterior is Pr(α, β , σ | H, W)

- which is proportional to Normal(W|μ,σ) ⨯ Normal(α|178,100) ⨯ LogNormal(β|0,1) ⨯ Uniform(σ|0,10)

- The posterior is Pr(α, β , σ | H, W)

- posterior distribution: after considering every possible combination of the parameters, it’s the assigned relative plausibilities to every combination, conditional on this model and these data. (from Ch.6)

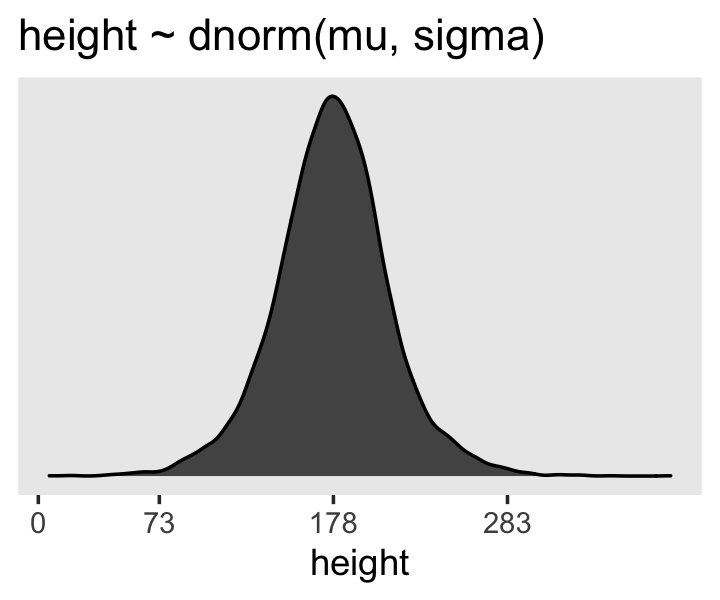

- Intro to priors

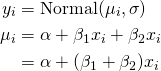

- Model Notation

- Example single variable regression where height is the response and weight the predictor

- hi ~ Normal(μ, σ) # response

- μi = α + βxi # response mean (deterministic, i.e. no longer a parameter)

- α ~ Normal(178, 100) # intercept

- β ~ Normal(0, 10) # slope

- σ ~ Uniform(0, 50) # response s.d.

- parameters are α, β, and σ

- Example single variable regression where height is the response and weight the predictor

- Centering/standardization of predictors can remove correlation between parameters

- Without this transformation, parameters and their uncertainties will co-vary within the posterior distribution

- e.g. high intercepts will often mean high slopes

- Without independent parameters

- They can’t be interpreted independently

- Effects on prediction aren’t independent

- Without this transformation, parameters and their uncertainties will co-vary within the posterior distribution

- CIs, PIs

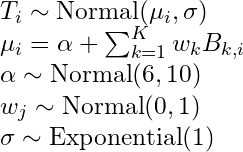

- basis splines (aka b-splines)

- The posterior distribution is the probability density of every combination of all the parameter values

- Ch 5 - Multi-Variable Linear Models

- Model Notation

- DivorceRatei ~ Normal(μi, σ)

- μi = α + β1MedianAgeMarriage_si + β2MarriageRate_si

- α ~ Normal(10, 10)

- β1 ~ Normal(0, 1)

- β2 ~ Normal(0, 1)

- σ ~ Uniform(0, 10)

- Interpretation

- DAGs

- Inferential Plots

- predictor residual, counter-factual, and posterior prediction

- masking

- correlation between predictors and opposite sign correlation of each with the outcome variable can lead increased estimated effects in a multi-regression as compared to individual bivariable regressions

- categorical variables (not ordinals)

- Using an index variable is preferred to dummy variables

- the index method allows the priors for each category to have the same uncertainty

- no intercepts used in the model specifications

- Contrasts

- Using an index variable is preferred to dummy variables

- Model Notation

- Ch 6 - Colliders, Multicollinearity, Post-Treatment Bias

- multicollinearity

- Consequences

- the posterior distribution will seem to suggest that none of the collinear variables is reliably associated with the outcome, even if all of the collinear variables are in reality strongly associated with the outcome.

- The posterior distributions of the parameter estimates will have very large spreads (std.devs)

- i.e. parameter mean estimates shrink and their std.devs inflate as compared to the bivariate regression results.

- predictions won’t be biased but interpretation of effects will be impossible

- the posterior distribution will seem to suggest that none of the collinear variables is reliably associated with the outcome, even if all of the collinear variables are in reality strongly associated with the outcome.

- solutions

- Think causally about what links the collinear variables and regress using that variable instead of the collinear ones

- Use data reduction methods

- Consequences

- post-treatment bias

- mistaken inferences arising from including variables that are consequences of other variables

- i.e. the values of the variable are a result after treatment has been applied

- e.g. using presence of fungus as a predictor even though it’s value is determined after the anti-fungus treatment has been applied

- Consequence: it can mask or unmask treatment effects depending the causal model (DAG)

- mistaken inferences arising from including variables that are consequences of other variables

- collider bias

- When you condition on a collider, it induces statistical—but not necessarily causal— associations.

- Consequence:

- The statistical correlations/associations are present in the data and may mislead us into thinking they are causal.

- Although, the variables involved may be useful for predictive models as the backdoor paths do provide valid information about statistical associations within the data.

- Depending on the causal model, these induced effects can be inflated

- The statistical correlations/associations are present in the data and may mislead us into thinking they are causal.

- A more complicated demonstration of Simpson’s Paradox (see My Appendix)

- Applications of Backdoor Criterion

- See Causal Inference note

- Recipe

- List all of the paths connecting X (the potential cause of interest) and Y (the outcome).

- Classify each path by whether it is open or closed. A path is open unless it contains a collider.

- Classify each path by whether it is a backdoor path. A backdoor path has an arrow entering X.

- If there are any backdoor paths that are also open, decide which variable(s) to condition on to close it.

- multicollinearity

- Ch 7 - Information Theory, Prediction Metrics, Model Comparison

- Regularizing prior (type of skeptical prior)

- Typicall priors with smaller sd values

- Flat priors result in a posterior that encodes as much of the training sample as possible. (i.e. overfitting)

- When tuned properly, reduces overfitting while still allowing the model to learn the regular features of a sample.

- Too skeptical (i.e. sd too small) results in underfitting

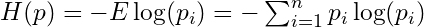

- Information Entropy

- The uncertainty contained in a probability distribution is the average log-probability of an event.

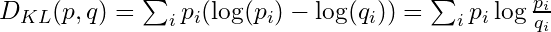

- Kullback-Leibler Divergence (K-L Divergence)

- The additional uncertainty induced by using probabilities from one distribution to describe another distribution.

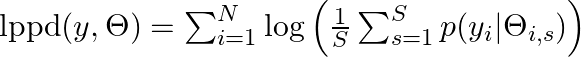

- Log Pointwise Predictive Density (lppd)

- Sum of the log average probabilities

- larger is better

- The log average probability is an approximation of information entropy

- Sum of the log average probabilities

- Deviance

- -2*lppd

- smaller is better

- An approximation for the K-L divergence

- -2*lppd

- Predictive accuracy metrics Can’t use any information criteria prediction metrics to compare models with different likelihood functions

- Pareto-Smoothed Importance Sampling Cross-Validation (PSIS)

- Estimates out-of-sample LOO-CV lppd

- loo pkg

- “elpd_loo” - larger is better

- “looic” - is just (-2 * elpd_loo) to convert it to the deviance scale, therefore smaller is better

- Rethinking pkg: smaller is better

- loo pkg

- Weights observations based on influence on the posterior

- Uses highly influential observations to formulate a pareto distribution and sample from it(?)

- Estimates out-of-sample LOO-CV lppd

- Widely Applicable Information Criterion (WAIC)

- Deviance with a penalty term based on the variance of the outcome variable’s observation-level log-probabilities from the posterior

- Estimates out-of-sample deviance

- loo pkg:

- “elpd_waic”: larger is better

- “waic”: is just (-2 * elpd_waic) to convert it to deviance scale, therefore smaller is better

- Rethinking pkg: smaller is better

- loo pkg:

- Bayes Factor

- The ratio (or difference when logged) of the average likelihoods (the denominator of bayes theorem) of two models.

- Pareto-Smoothed Importance Sampling Cross-Validation (PSIS)

- Model Comparison

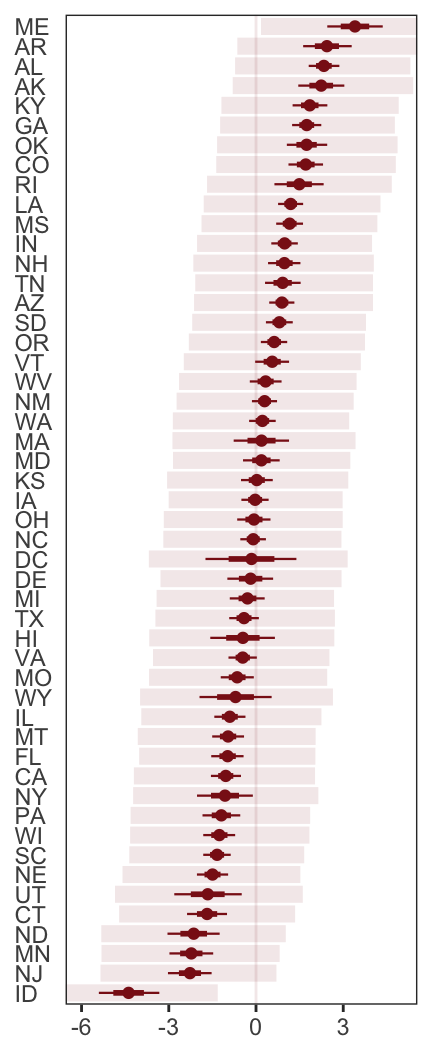

- To judge whether two models are “easy to distinguish” (i.e. kinda like whether their scores are statistically different), we look at the differences between the model with the best WAIC and the WAICs of the other models along with the standard error of the difference of the WAIC scores

- Leave-one-out cross-validation (LOO-CV)

- Has serious issues, I think (see Vehtari paper for recommendations, (haven’t read it yet))

- Outliers

- Detection - High p_waic (WAIC) and k (PSIS) values can indicate outliers

- Solutions

- Mixture Model

- Robust Regression using t-distribution for outcome variable. As shape parameter, v, approaches 1+, tails become thicker.

- Regularizing prior (type of skeptical prior)

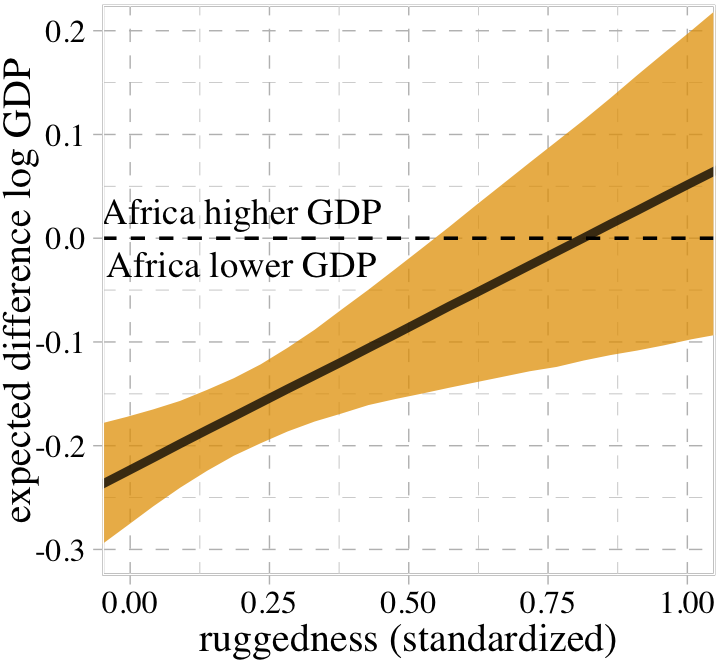

- Ch 8 - Interactions

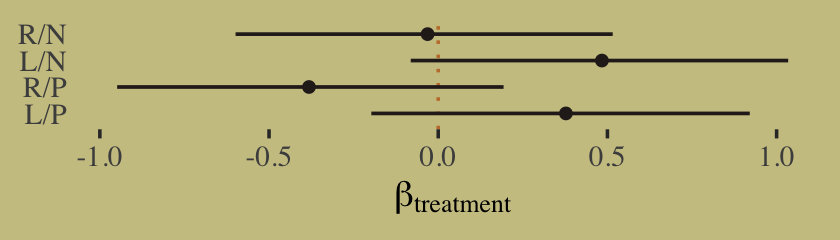

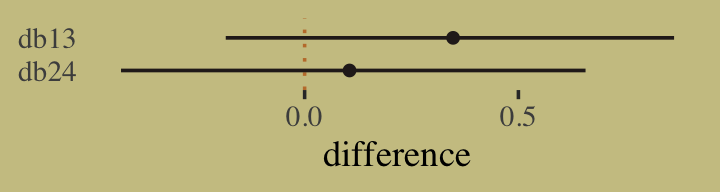

- continuous:categorical interaction

- Coded similarly to coding categoricals (index method)

- continuous:continuous interaction

- Coded very similar to the traditional R formula

- Interaction prior is the same as the variables used in the interaction

- Plots

- A counterfactual plot can be used to show the reverse of the typical interaction interpretation (i.e. association of continuous predictor conditioned on the categorical)

- Triptych plots are a type of facetted predictor (one of the interaction variables) vs fitted graph where you facet by bins, quantiles, levels of the other interaction variable

- continuous:categorical interaction

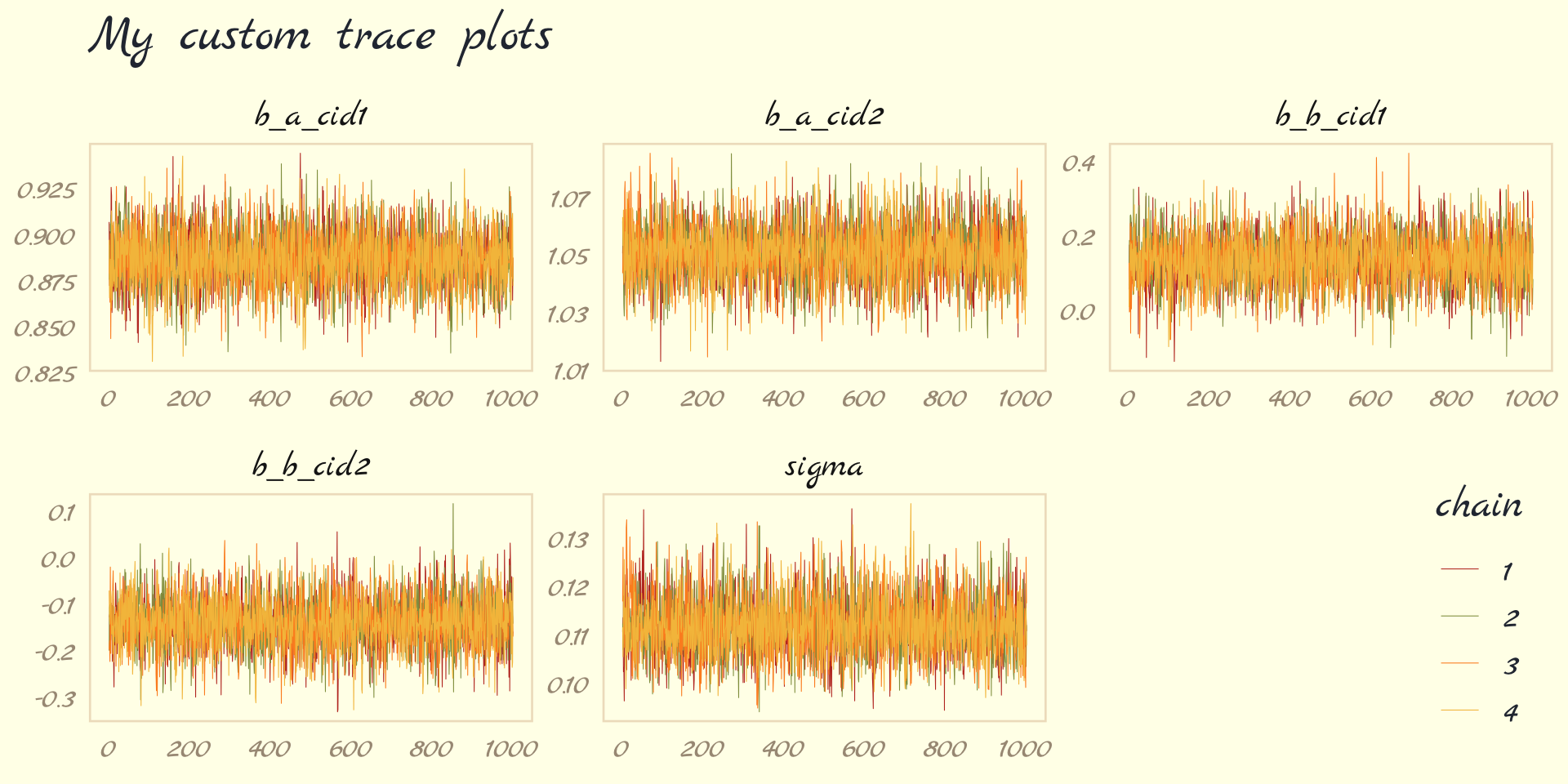

- Ch 9 - MCMC

- Gibbs

- Optimizes sampling the joint posterior density by using conjugate priors

- Inefficient for complex models

- Can’t discern bad chains as well as HMC

- Hamiltonian Monte Carlo (HMC)

- Uses Hamiltonian differential equations in a particle physics simulation to sample the joint posterior density

- Momentum and direction are randomly chosen

- Hyperparameters

- Used to reduce autocorrelation of the sampling (sampling is sequential) (U-Turn Problem)

- Determined during warm-up in HMC

- Stan uses NUTS2

- Leapfrog steps (L) - paths between sampled posterior value combinations are made up of leapfrog steps

- Step Size (ε) - The length of a leapfrog step is the step size

- Uses Hamiltonian differential equations in a particle physics simulation to sample the joint posterior density

- Diagnostics

- Effective Sample Size (ESS) - Measures the amount by which autocorrelation in samples increases uncertainty (standard errors) relative to an independent sample

- Bulk_ESS - effective sample size around the bulk of the posterior (i.e. around the mean or median)

- When value is much lower than the actual number of iterations (minus warmup) of your chains, it means the chain is inefficient, but possibly still okay

- Tail_ESS - effective sample size in the tails of the posterior

- No idea what’s good here.

- Bulk_ESS - effective sample size around the bulk of the posterior (i.e. around the mean or median)

- Rhat (Gelman-Rubin convergence diagnostic) - estimate of the convergence of Markov chains to the target distribution

- If converges, Rhat = 1+

- If value is above 1.00, it usually indicates that the chain has not yet converged, and probably you shouldn’t trust the samples.

- Early versions of this diagnostic can fail for more complex models (i.e. bad chains even when value = 1)

- Trace Plots

- Multi-line plot depicting the sampling of parameter values in the joint posterior

- lazy, fat caterpillars = good chains

- Not recommended since 1 pathological chain can remain hidden in the plot

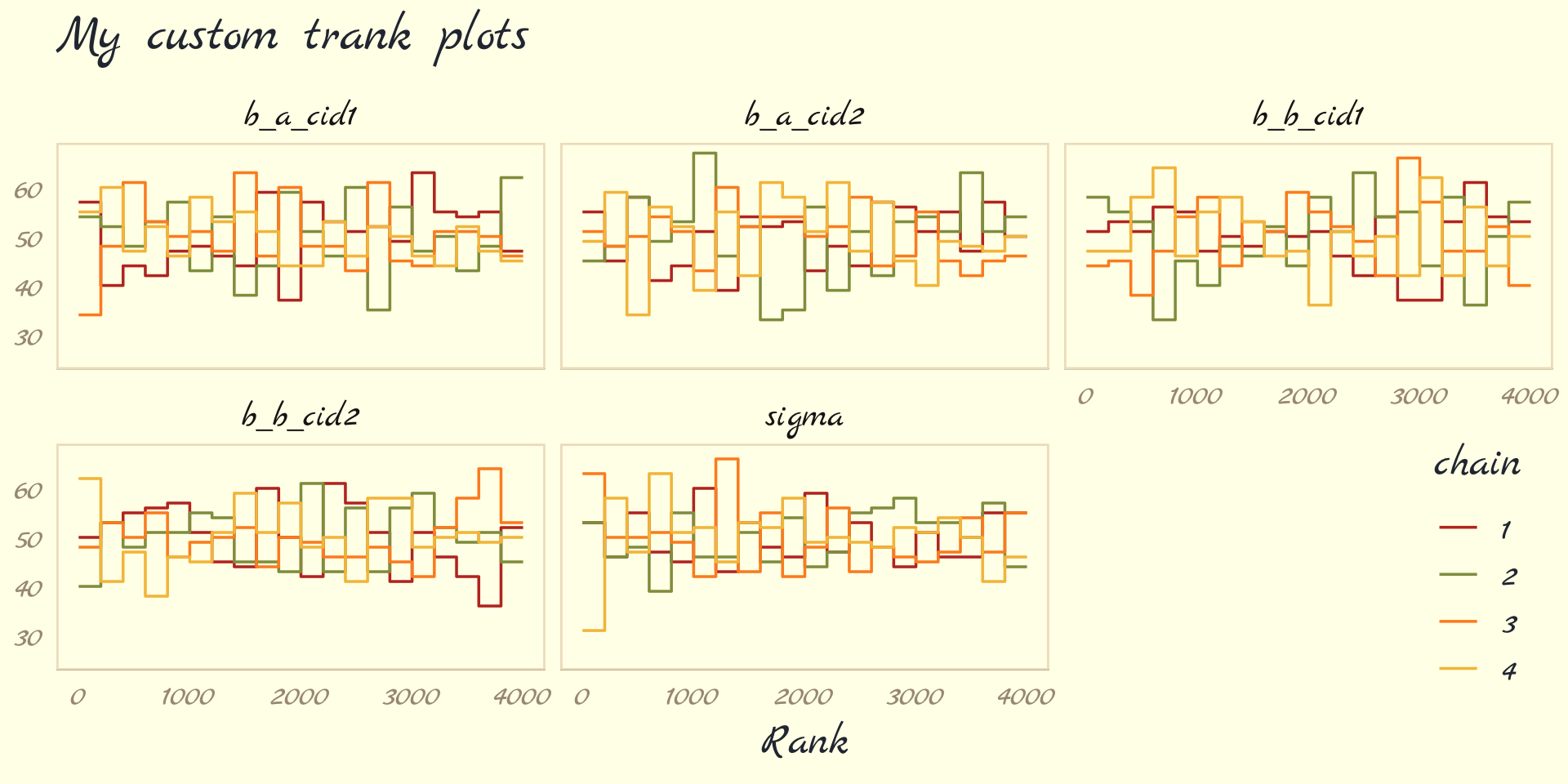

- Trank plots

- A layered histogram method that is easier to discern each chain’s health than using trace plots

- Effective Sample Size (ESS) - Measures the amount by which autocorrelation in samples increases uncertainty (standard errors) relative to an independent sample

- Set-up

- Warm-up samples

- More complex models require more warm-up

- Start will default and adjust based on ESS values

- Post-Warmup samples

- 200 for mean estimates using not-too-complex regression models

- Much moar required for

- Complex models

- Finer resolution of the tails

- Non-Gaussian distributions

- Chains

- debugging: 1

- Some stan errors only display when 1 chain is used

- Validation of chains: 3 or 4

- Final Run: only need 1 but can use more depending on compute power/# of vCPUs

- debugging: 1

- Warm-up samples

- Problems with ugly chains in trace/trank plots

- Solutions for the 2 Examples were to use weakly informative priors ¯\_(ツ)_/¯

- Gibbs

- Ch 10 - GLM Concepts

- The principle of maximum entropy provides an empirically successful way to choose likelihood functions. Information entropy is essentially a measure of the number of ways a distribution can arise, according to stated assumptions. By choosing the distribution with the biggest information entropy, we thereby choose a distribution that obeys the constraints on outcome variables, without importing additional assumptions. Generalized linear models arise naturally from this approach, as extensions of the linear models in previous chapters.

- The maximum entropy distribution is the one with the greatest information entropy (i.e. log number of ways per event) and is the most plausible distribution.

- No guarantee that this is the best probability distribution for the real problem you are analyzing. But there is a guarantee that no other distribution more conservatively reflects your assumptions.

- maximum entropy also provides a way to generate a more informative prior that embodies the background information, while assuming as little else as possible.

- Omitted variable bias can have worse effects with GLMs

- Gaussian

- A perfectly uniform distribution would have infinite variance, in fact. So the variance constraint is actually a severe constraint, forcing the high-probability portion of the distribution to a small area around the mean.

- The Gaussian distribution gets its shape by being as spread out as possible for a distribution with fixed variance.

- The Gaussian distribution is the most conservative distribution for a continuous outcome variable with finite variance.

- The mean µ doesn’t matter here, because entropy doesn’t depend upon location, just shape.

- Binomial

- Binomial distribution has the largest entropy of any distribution that satisfies these constraints:

- only two unordered events (i.e. dichotomous)

- constant expected value (i.e. exp_val = sum(prob*num_events))

- If only two un-ordered outcomes are possible and you think the process generating them is invariant in time—so that the expected value remains constant at each combination of predictor values— then the distribution that is most conservative is the binomial.

- Binomial distribution has the largest entropy of any distribution that satisfies these constraints:

- Other distributions

- Ch 11

- Logistic Regression models a 0/1 outcome and data is at the case level

- Example: Acceptance, A; Gender, G; Department, D Ai ~ Bernoulli(pi) logit(pi) = α[Gi, Di]

- Binomial Regression models the counts of a Bernoulli variable that have been aggregated by some group variable(s)

- Example: Acceptance counts that have been aggregated by department and gender Ai ~ Binomial(Ni, pi) logit(pi) = α[Gi, Di]

- Results are the same no matter whether you choose to fit a logistic regression with case-level data or aggregate the case-level data into counts and fit a binomial regression

- brms models

- Logistic

- family = bernouilli

- formula: outcome_var|trials(1)

- Binomial:

- family = binomial

- formula

- balanced: outcome_var|trials(group_n)

- unbalanced: outcome_var|trials(vector_with_n_for_each_group)

- Logistic

- rstanarm models specified just like using glm

- Flat Normal priors for logistic or binomial do NOT have high sds. High sds say that outcome event probability is always close to 0 or close 1.

- For flat intercept: sd = 1.5

- For flat slope: sd = 1.0

- See Bayes, priors for details on other options

- Effects

- Types

- Absolute Effects - The effect of a (counter-factual) change in predictor value (type of treatment) has on the outcome (probability of an event)

- Contrast of the predicted values (e.g. marginal means)

- Relative Effects - The effect of a (counter-factual) change in predictor value (type of treatment) has on the outcome (odds of an event)

- Absolute Effects - The effect of a (counter-factual) change in predictor value (type of treatment) has on the outcome (probability of an event)

- Types

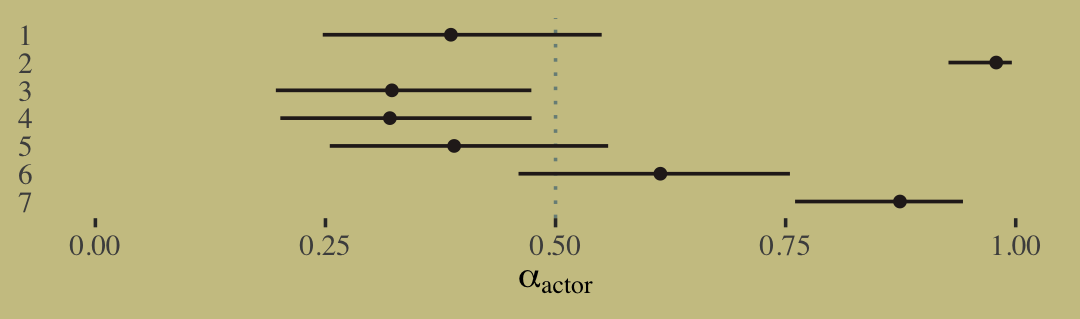

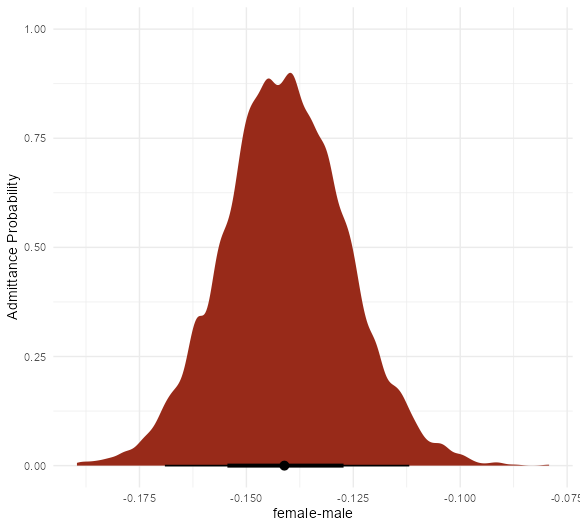

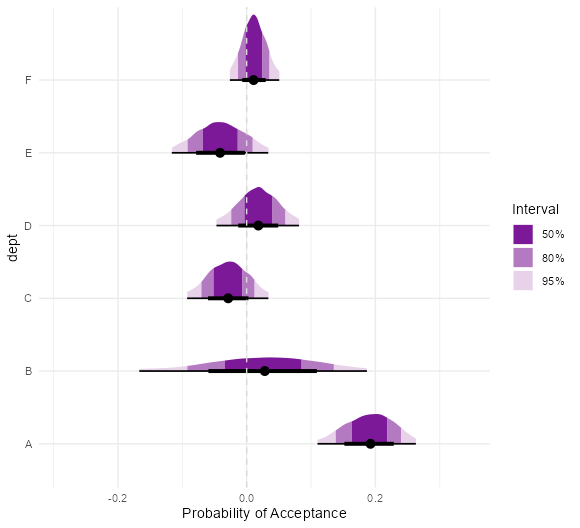

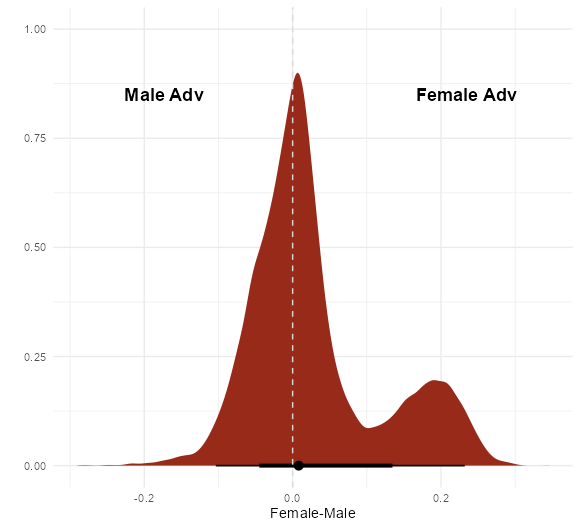

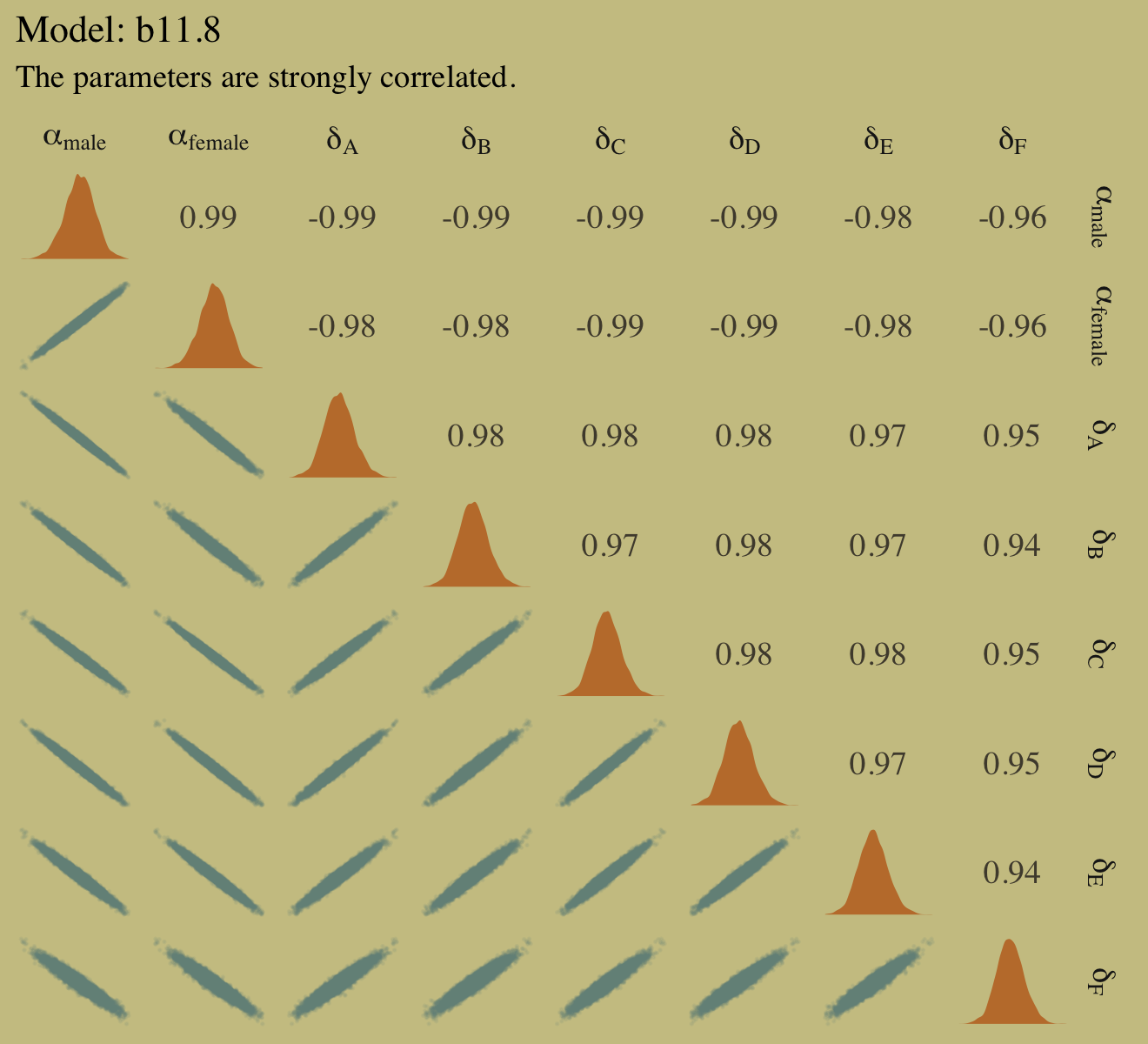

- UC Berkeley gender discrimination analysis

- Typical pipe DAG for many social science analyses where unobserved confounders are often an issue

- Also see Causal Inference >>

- Misc >> Partial Identification

- Mediation Analysis

- Poisson - when N is very large and probability of an event, p, is very small, then expected value and variance are approximately the same. The shape of this distribution is the Poisson

- Flat Normal priors for Poisson also do NOT have high sds

- Not sure if these are standard flat priors, but the priors in the Example were

- Intercept sd = 0.5

- Slope sd = 0.2

- Not sure if these are standard flat priors, but the priors in the Example were

- Logistic Regression models a 0/1 outcome and data is at the case level

Questions

- Ch 2

- In Ch2, there are couple times where McElreath is talking about differences between Bayesian and Frequentist approaches in the terms of the subjective choices that are made by each. For Bayesians, it’s the choice of prior, and for Frequentists, it’s the choice of “estimator.” Does anybody know exactly what he’s means when he says “estimator?” My intuition is that he’s talking about something like MLE, but I don’t see how that would be a subjective choice in the same vein as the choice of prior would be.

- “choice of estimator” keeps being referred to — what exactly is he talking about?

- Sample size and reliable inference (pg 31)

- “A Bayesian golem must choose an initial plausibility, and a non-Bayesian golem must choose an estimator.”

- prior, prior, pants of fire (pg 36)

- non-Bayesian procedures need to make choices that Bayesian ones do not, such as choice of estimator or likelihood penalty

- Sample size and reliable inference (pg 31)

- Ch 3

- Posterior Predictive Distribution

- weighted frequency average blah blah blah isn’t completely clear to me, so how is this calculation actually done

- pr = prob of a param value (or conjecture) from sampled posterior density

- W = total count for a value of W for all the trials for given param value

- cn = number of conjectures

- wn = number of possible count of W for each trial

- weighted frequency average for W = 4 is [(prc1 * W4c1) + (prc2 * W4c2) + …. + (prcn * W4cn)] / cn

- these are counts unlike the posterior density

- therefore maybe the shape is dependent on “size”?

- weighted frequency average blah blah blah isn’t completely clear to me, so how is this calculation actually done

- Thought: likelihood is the probability of a conjecture given the data then the denominator “standardizes” that probability in terms of the other likelihoods. This standardized probability is the proportion of the updated posterior probability density that is attributable to this conjecture.

- actually it would need to be the whole thing (i.e. the whole quantity calc’d in bayes theorem) but I think that’s what it is. And these must all be additive, or maybe not additive but the integral from p = whatever to p = whatever

- enjoyed the fig. looking at in reverse helped me think about the posterior density and bayes equation. The standardizing of the likelihoods for a particular parameter value is the proportion it attributes to the density.

- Posterior Predictive Distribution

- Ch 4

- Thoughts

- The likelihood, prior, and posterior densities are probability densities each with an area = 1. Looking at the marble tables it looks like the individual posterior probabilities sum to 1. So, the sum (we’re talking densities so probably this “sum” = integration actually) of all the products of the multiiplication of the prior and likelihood densities must not have an area = 1. Therefore, the denominator (i.e. sum of products) then standardizes these products so the posterior density does have an area of 1.

- Would this make the posterior a joint density of parameter combinations (aka conjectures)? It does (pg 96)

- ?

- extracting samples from the posterior: “it uses the variance-covariance matrix and coefficients to define a multivariate Gaussian posterior to draw n samples from.”

- how does this work?

- bottom page 101

- can’t find the code

- how does this work?

- extracting samples from the posterior: “it uses the variance-covariance matrix and coefficients to define a multivariate Gaussian posterior to draw n samples from.”

- Thoughts

- Ch 7

- dgp

yi ∼ Normal(µi , 1) µi = (0.15)x1,i − (0.4)x2,i

The first model, with 1 free parameter to estimate, is just a linear regression with an unknown mean and fixed σ = 1. Each parameter added to the model adds a predictor variable and its beta-coefficient. Since the “true” model has non-zero coefficients for only the first two predictors, we can say that the true model has 3 parameters

- Doesn’t makes sense when he says WAIC and CV are trying to predict different things.

- although their equations for lppd are slightly different. BUT they’re both lppd. So is the difference just a typo?

- His interpretation of WAIC having better accuracy of out-of-sample deviance doesn’t make sense. We have no true deviance value. I mean if its an estimate of the K-L divergence, does that mean the true deviance value is supposed to zero at 3 parameters.

- “WAIC is unsurprisingly a little better at predicting out-of-sample deviance, because that is what it aims to predict. Cross-validation (CV) is a trick that estimates out-of-sample deviance as the sample size increases, but it doesn’t aim for the right target at small sample sizes. And since PSIS approximates CV, it has the same issue.”

- “CV and PSIS have higher variance as estimators of the K-L Divergence, and so we should expect WAIC to be better in many cases.”

- dgp

- Ch 8

- pg 246 First he scales the predictor by dividing by the max, because 0 is meaningful. Then he centers it by subtracting the mean from it, because he wants to be able to define the prior more easily.

- I haven’t thought much about this but what’s the interpretation here? Is it in sd units still even though it’s scaled by the max?

- doubtful

- Maybe Gelman’s book has something

- Instead of scaling a centered value (standardization), he centered a scaled value. So the answer is how does a centered value get interpreted?

- Does the order of operations matter here?

- Yes. Depends when the mean is taken. Is it taken on the scaled value or the original scale value

- for a unit increase above the mean value, the outcome variable increases by

amount. The intercept is the value of the outcome when the predictor is held at its mean. - The mean value is some value between 0 and 1 where 1 is the maximum

- Whats a unit in this case? 0.1?

- Does the order of operations matter here?

- I haven’t thought much about this but what’s the interpretation here? Is it in sd units still even though it’s scaled by the max?

- 8.3.2 pg 259

- He sets intercept prior to have range that has 5% of the mass outside the possible range of the outcome variable. Why have a potential for values outside the range at all?

- He says, “the goals here are to assign weakly informative priors to discourage overfitting”

- How does this match up with “flat priors encourage overfitting?” Is a conservative/skeptical prior considered a weakly informative prior?

- Does centering or scaling affect these prior values, I think so (because thats why we do it). Therefore may negative values are possible? 0 is no longer the base in a standardized variable. It’s the mean.

- pg 246 First he scales the predictor by dividing by the max, because 0 is meaningful. Then he centers it by subtracting the mean from it, because he wants to be able to define the prior more easily.

- Ch 9

- pg 288 Talks about having more than 2000 effective samples and them being anti-correlated. Plus Stan uses an adaptive sampler. Guess this nut2, but he uses “adaptive” which makes me think of the gibb sampler. Whole paragraph is confusing to me.

- Maybe correlation has something to do with the “effective” part and whatever anti-correlation is makes this number go beyond 2000 samples (the default setting that was used)

- pg 288 Talks about having more than 2000 effective samples and them being anti-correlated. Plus Stan uses an adaptive sampler. Guess this nut2, but he uses “adaptive” which makes me think of the gibb sampler. Whole paragraph is confusing to me.

- Ch 10

- pg 312 “This reveals that the distribution that maximizes entropy is also the distribution that minimizes the information distance from the prior, among distributions consistent with the constraints”.

- Doesn’t make sense to me by looking at the equation, since the larger the log difference (ie distance) between pi and qi, larger the entropy (greater positive value).

- Think I might be misunderstanding “the distribution that minimizes the information distance from the prior”. Maybe that’s not referring to the log distance.

- redo log diff calcs to make sure

- solution: The key is “consistent with constraints.” If you just choose a couple far-away pi vs pi that are close to q, then the reverse of the statement is true. But if you have a constraint, such as pis must sum to 1, then his statement is true.

- pg 312 “This reveals that the distribution that maximizes entropy is also the distribution that minimizes the information distance from the prior, among distributions consistent with the constraints”.

- Ch 11

- pg 352 He shows how Department could be a collider and therefore conditioning on it would open a backdoor path. But NOT conditioning on Department allows the backdoor fork path to remain open. What the solution? Find another variable that somehow disrupts the fork?

General Notes

- Workflows

- 8.3.2 pg 258

- scale and/or center outcome and predictors so that priors are more easily specified

- Reason through the values of the priors for each parameter according the range of values of the predictor and outcome

- fit models

- plot posterior parameters

- plot prior predictive simulations

- 8.3.2 pg 258

- Differences between Bayesian and Frequentist

- Also see

- Parameter estimation

- Bayesian Updating - Count the number of ways a parameter value can result in seeing the data sample. The parameter value that can happen the greatest number of ways is the parameter estimate. With new data, the count is updated and a new parameter estimate is chosen.

- Domain knowledge (prior formulation) can be used to help the model learn

- With posteriors, it is easy to visualize the most plausible values for the parameters and quantify any uncertainty in our estimates.

- prior-to-posterior cycle provides a principled way to update the parameters if additional observations become available.

- Frequentism - Whole model must be re-fitted

- Bayesian Updating - Count the number of ways a parameter value can result in seeing the data sample. The parameter value that can happen the greatest number of ways is the parameter estimate. With new data, the count is updated and a new parameter estimate is chosen.

- Sample Size

- Important choices for small sample sizes that effect the GOF:

- Bayesian choose a prior distribution of the parameter of interest.

- Frequentists choose an estimator

- Any rules you’ve been taught about minimum sample sizes for inference are just non-Bayesian superstitions. If you get the prior back, then the data aren’t enough.

- Bayesian updating produces valid estimates at any sample size

- Frequentism - e.g. around n = 30 for Guassian distribution, etc.

- Bayesian inference is agnostic to any pre-specified sample size and is not really affected by how frequently you look at the data along the way (see article linked in Sample Size >> Bayesian)

- A bayesian power analysis to calculate a desired sample size entails using the posterior distribution probability threshold (or another criteria such as the variance of the posterior distribution or the length of the 95% credible interval)

- Important choices for small sample sizes that effect the GOF:

- Point Estimates

- Bayesian - The parameter distribution is the estimate.

- Summaries of the distribution (mean, mode, etc.) can be calculated from that distribution, but the whole distribution is what is reported and used for any subsequent calculations.

- Frequentist - Point estimates are calculated - usually the mean. (median would probably require a different model)

- Bayesian - The parameter distribution is the estimate.

- Confidence Intervals

- Bayesian - the alpha used is chosen according to the use-case. 95% CIs aren’t relevant for Bayesian inference because significance testing (e.g. p-values) on the effect size isn’t done in Bayes. CIs are used as part of the summary of the distribution.

- Frequentist - t-tests then p-values are calculated to determine whether 0 is contained in the interval.

- For a Bayesian, there is no choice of estimator.(?) It’s always the posterior distribution.

- I think this refers to the optimization procedure like least squares or negloglikelihood or maybe numerical methods of optimizing the parameters. While a Bayesian only uses the Bayes equation to solve for the posterior distribution.

- Frequentists make inferences about parameters directly through a sampling distribution but for Bayesians the posterior distribution is not sampled but deduced logically and then samples are taken from the posterior to aid in inference.

- Uncertainty

- Frequentist: probabilities are tied to countable events and their frequencies in very large samples. Measurement uncertainty is therefore based on imaginary resamplings where the measurement values eventually would reveal a pattern. The distribution of these imaginary measurements (e.g. means, model estimates, etc) is called the sampling distribution. Frequentist uncertainty is about the randomness in a measurement.

- Bayesian: Uncertainty is a property of having incomplete information. It’s not an inherent property of the real world.

- Hypothesis vs Data

- Frequentist: The hypothesis or state of the world is fixed and data are variable. Meaning a hypothesis is either true or false and the data you collected is a sample. This sample is one of many different potential samples you could’ve collected from the population (distribution).

- Bayesian: The hypothesis is variable and the data is fixed. There are many different hypotheses, and the question is which one is most plausible given this data. The data isn’t thought of as a portion of the all the data. It’s what does this data say about the plausibility of all the potential hypotheses.

- From Post-Hoc Analysis note

- Frequentist null hypothesis significance testing (NHST) determines the probability of the data given a null hypothesis (i.e. P(data|H), yielding results that are often unwieldy, phrased as the probability of rejecting the null if it is true (hence all that talk of “null worlds” earlier). In contrast, Bayesian analysis determines the probability of a hypothesis given the data (i.e.P(H|data)), resulting in probabilities that are directly interpretable.

- Simulation of predictions

- Bayesians models are always generative (i.e. capable of simulating predictions, aka dummy data)

- Some Frequentist models are generative and others are not.

- Many frequentist models have bayesian counterparts and vice versa

- The Bayesian interpretation of a non-Bayesian procedure recasts assumptions in terms of information, and this can be very useful for understanding why a procedure works.

- A Bayesian model can be embodied in a more efficient, but approximate, non-Bayesian procedure. Bayesian inference means approximating the posterior distribution. It does not specify how that approximation is done.

- Similarities between Bayesian and Frequentist

- Dependence on the likelihood function and the assumptions involved in selecting this function.

- Various Bayes’ Theorem formulations

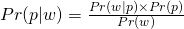

(chapter 2)

(chapter 2)

where

where

- Pr(w)( also referred to as a Marginal Probability) averages the likelihood over the prior. The integral is how you calculate an average over a continuous distribution of values (e.g. all possible prior values). Pr(w)’s job is to standardize the posterior (i.e. values between 0 and 1)

- This says the probability of the parameter given the data is equal to the likelihood x the prior (which is standardized by the average likelihood to make it a probability).

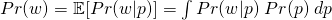

Using the model definition for human heights Example (pg 83):

- for height, hi ~ Normal(μ, σ), μ ~ Normal(178, 20), σ ~ Uniform(0,50)

- In the numerator, the likelihood for each height x priors are multiplied together to get a joint likelihood across all the data

- The denominator is the likelihood averaged over the priors in order to standardize the probability.

- Notation:

y - data (outcome and predictors)

θ - parameters

p(y, θ) - joint probability distribution of the data and parameters

- What STAN is fitting in the background. Fits all the parameters all at once.

p(θ) - prior probability distribution - the probability of the parameters before any data are observed

p(θ | y) - posterior probability distribution - the probability of the parameters conditional on the data (i.e. after seeing the data)

p(y | θ)

- If y is fixed, this is the likelihood function (when you’re fitting your model)

- If θ is fixed, this is the sampling distribution (when your model is fitted and the parameters are estimated)

- Used to make predictions on unseen data

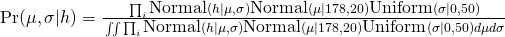

When describing the likelihood for a Binomial Distribution, these two notations are equivalent,

, where W is the variable of interest (e.g. count of heads after n coin flips with a p probability of heads) and n and p are the parameters of the Binomial distribution.

, where W is the variable of interest (e.g. count of heads after n coin flips with a p probability of heads) and n and p are the parameters of the Binomial distribution.p( ) or f( ) denotes probability densities (continuous distributions)

Pr( ) denotes probability masses (discrete distributions)

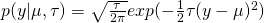

Bayesians often write the Gaussian probability density as :

- where

(aka the precision)

(aka the precision)

- where

Model Definitions

- Example: Globe tossing

- w ~ Binomial (n, p)

- p ~ Uniform (0, 1)

- “The count w is distributed binomially with sample size n and probability p. The prior for p is assumed to be uniform between 0 and 1.”

- A Uniform prior is often called a “flat prior.”

- Example single variable regression where height is the outcome and weight the predictor

- hi ~ Normal(μ, σ)

- μi = α + βxi

- α ~ Normal(178, 100)

- β ~ Normal(0, 10)

- σ ~ Uniform(0, 50)

- hi is the likelihood and ui is the linear model (now deterministic, not a parameter). The rest of the definitions are for priors.

- Multivariate notation with Divorce Rate as the outcome and state median age of marriage and state marriage rate as predictors

- Divorcei ~ Normal(μi, σ)

- μi = α + β1MedianAgeMarriage_si + β2Marriage_si

- α ~ Normal(10, 10)

- β1 ~ Normal(0, 1)

- β2 ~ Normal(0, 1)

- σ ~ Uniform(0, 10)

- The s signifies the variable has been standardized.

- General Multilevel Model: yi ~ D( f (ηi), θ)

- η represents any linear predictor, α + βxi

- f represents any inverse link function

- Example: Globe tossing

- Misc

- The demarcation between science and not-science is not falsifying a null hypothesis. All null hypotheses are false. There is never nothing happening. There’s always an effect. It’s just whether we have enough data to be able to measure it. It’s about falsifying a research hypothesis.

- BUGS (Bayesian models using Gibbs sampling) - project in the 1980s to get Bayesian models on desktop computers.

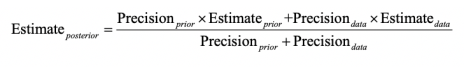

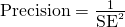

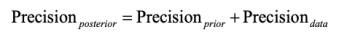

- From a Stephen Senn post on meta-analysis, standard errors, and point estimates:

and

and

1.1 Chapter 2

- 1 blue marble is drawn from the bag and replaced. The bag is shaken, and a white marble is drawn and replaced. Finally, the bag is shaken, and a blue marble is drawn and replaced

.png)

- each ring is a iid observation (bag shaken and a marble drawn and replaced)

- In this Example, the “garden of forking paths” is set of all potential draws (consisting of 3 observations), given the conjecture of there being 1 blue and 3 white marbles in the bag

.png)

- If we actually do draw a marble, record the observation, replace the marble, repeat 2 more times, and the result is blue, white, blue, then these are the number of paths in each conjecture’s garden that are consistent with that outcome

- For conjecture 1 blue, 3 white, 1, 3, 1 is the number of paths in each ring, respectively, that remain consistent with the sequence of recorded observations.

- When multiplied together, the product equals the total consistent paths.

.png)

- After the bag is shaken, a new marble is drawn (new data) — it’s blue. Previous counts are now the prior.

- The ways this new blue marble can be drawn, given a conjecture, is used to update each prior count through multiplication.

- This is equivalent to starting over and drawing another marble after the previous 3 iid observations.

- The plausibility of a conjecture, p1, is the prior plausibility given p1 * new count given p1 and then that product is standardized into a probability so that it is comparable.

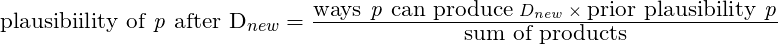

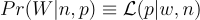

- plausibilityp1 = (new_countsp1 *prior_plausibilityp1) / sum of all the (prior*new) products of the other conjectures

- It’s the probability of the conjecture given the new data

- The plausibility of a conjecture, p, after seeing new evidence, Dnew, is proportional to the ways the conjecture, p, can produce the new evidence, Dnew, times the prior plausibility of the conjecture, p.

- Equivalents:

plausibility of p after Dnew

ways p can produce Dnew x prior plausibility of p

ways p can produce Dnew x prior plausibility of p

- sum of products = sum of the WAYS of each conjecture. For Conjecture 0 blues = 0 ways, Conjecture 1 blue = 3 ways (see above), Conjecture 2 blues = 8 ways, Conjecture 3 blues = 9 ways, Conjecture of 4 blues = 0. Therefore sum of products = 20.

- if the prior plausibility of p for conjecture of 1 blue marble = 1 (and the rest of the conjectures, i.e. flat prior), then plausibility of conjecture 1 blue = (3*1)/20 = 0.15. The plausibility is a way to normalize the counts to be between 0 and 1.

.png)

- Equivalents:

- A conjectured proportion of blue marbles, p, is usually called a parameter value. It’s just a way of indexing possible explanations of the data

- Here p, proportion of surface water, is the unknown parameter, but the conjecture could also be other things like sample size, treatment effect, group variation, etc.

- There can also be multiple unknown parameters for the likelihood to consider.

- Every parameter must have a corresponding prior probability assigned to it.

- The relative number of ways that a value p can produce the data is usually called a likelihood.

- It is derived by the enumerating all the possible data sequences that could have happened and then eliminating those sequences inconsistent with the data (i.e. paths_consistent_with_data / total_paths).

- As a model component, the likelihood is a function that gives the probability of an observation given a parameter value (conjecture)

- “how likely your sample data is out of all sample data of the same length?”

- Example (the proportion of water to land on the earth):

- W is distributed Binomially with N trials and a probability of p for W in each trial,

- “The count of ‘water’ observations (finger landing on water), W, is distributed binomially, with probability p of ‘water’ on each toss of a globe and N tosses in total.”

- L(p | W, N) is another likelihood notation

- Assumptions:

- Observations are independent of each other

- The probability of observation of W (water) is the same for every observation

dbinom(x, size, prob)- x = # of observations of water (W)

- size = sample size (N) (number of tosses)

- prob = parameter value (conjecture)(i.e. hypothesized proportion of water on the earth) (p)

- W is distributed Binomially with N trials and a probability of p for W in each trial,

- The prior plausibility of any specific p is usually called the prior probability.

- A distribution initial plausibilities for every value of a parameter

- Expresses prior knowledge about a parameter and constrains estimates to reasonable ranges

- Unless there’s already strong evidence for using a particular prior, multiple priors should be tried to see how sensitive the estimates are to the choice of a prior

- Example where the prior is a probability distribution for the parameter:

- p is distributed Uniformly between 0 and 1, (i.e. each conjecture is equally likely)

- p is distributed Uniformly between 0 and 1, (i.e. each conjecture is equally likely)

- Weakly Informative or Regularizing priors: conservative; guards against inferences of strong association

- mathematically equivalent to penalized likelihood

- The new, updated relative plausibility of a specific p is called the posterior probability.

- The set of estimates, aka relative plausibilities of different parameter values, aka posterior probabilities, conditional on the data — is known as the posterior distribution or posterior density (e.g. Pr(p | N, W)).

- Thoughts

- The likelihood, prior, and posterior densities are probability densities each with an area = 1. Looking at the marble tables it looks like the individual posterior probabilities sum to 1. So, the sum (we’re talking densities so this “sum” = integration actually) of all the products of the multiiplication of the prior and likelihood densities must not have an area = 1. Therefore, the denominator (i.e. sum of products) then standardizes each of these products so the posterior density does have an area of 1.

- Numerical Solvers for the posterior distribution:

- Grid Approximation - compute the posterior distribution from only a portion of potential values (the grid of parameter values) for a set of unknown parameters

- Doesn’t scale well as the number of parameters grows

- Steps:

- Decide how many values you want to use in your grid (e.g.

seq( from = 0, to = 1, len = 1000))- number of parameter values in your grid is equal to the number of points in your posterior distribution

- Compute the prior value for each parameter value in your grid (e.g.

rep(1, 1000), uniform prior) - Compute the likelihood (e.g. using

dbinom(x, size, p = grid))for each grid value - Multiply the likelihood times the prior which is the unstandardized posterior

- Standardize that posterior by dividing by

sum(unstd_posterior)

- Decide how many values you want to use in your grid (e.g.

- Quadratic approximation - the posterior distribution can be represented by the Gaussian distribution quite well. The log of a Gaussian (posterior) distribution is quadratic.

- Steps:

- Find the mode of the posterior. Uses quadratic approximation. With a uniform prior this is equivalent to MLE

- Estimate the curvature of the posterior using another numerical method

- Needs larger sample sizes. How large is model dependent.

- Rethinking pkg function,

quap( )- inputs are likelihood function (e.g. dbinom) and prior function (e.g. punif), and data for the likelihood function

- outputs mean posterior probability and the std dev of the posterior distribution

- Steps:

- MCMC only briefly mentioned

- Grid Approximation - compute the posterior distribution from only a portion of potential values (the grid of parameter values) for a set of unknown parameters

1.2 Chapter 3 - Sampling

- Sampling from the posterior distribution means we can work with counts (easier, more intuitive) instead of working with the density which is working with integrals. Plus MCMC results are count based.

- Sampling from the posterior density:

samples <- sample(parameter values vector, prob = posterior density from output of model, size = # of samples you want, replace = T)- parameter values are the conjectures,

- posterior is their likelihood x prior,

- Grid Approximation for 1 parameter:

- Sampling from the posterior density:

p_grid <- seq( from=0, to=1, length.out=1000) # parameter values vector

prior <- rep(1,1000) # repeat 1 a thousand times to create a flat prior

likelihood <- dbinom(3, size = 3, prob=p_grid) # plausibility of 3 events out of 3 observations for each each conjectured parameter value

posterior_unstd <- likelihood * prior

posterior <- posterior_unstd / sum(posterior_unstd)

# sampling the posterior density

samples <- sample(p_grid, prob = posterior, size = 10000, replace = T)size is how many samples we’re taking

(See stat.rethinking brms recode bkmk for ggplot graphs and dplyr code)

Common questions to ask about the posterior distribution

- How much of the posterior probability lies below some parameter value?

- Example: Whats the posterior probability that the proportion of water on the globe is below 0.50?

- Answer:

sum(samples < 0.50) / total_samples(= 1e4, see above)- “/total sample” is the procedure if there are 1 or more parameters represented in the posterior density

- How much of the posterior probability is between two parameter values?

- Example: The posterior probability that the proportion of water is between 0.50 and 0.75?

- Answer:

sum(samples > 0.50 & samples < 0.75) / total_samples

- **

sum()of posterior samples gives probability for a specified parameter value ** - **

quantile()of posterior samples gives the parameter value for a specified probability ** - Which parameter value marks the lower 5% of the posterior probability?

- i.e. which proportion of water has a 5% probability?

- Answer:

quantile(samples, 0.05)

- Which range of parameter values contains the middle 80% of the posterior probability?

- Defined as the Percentile Interval (PI)

- Frequentist CIs are normally percentile intervals, just of the sampling distribution instead of the posterior

- Fine in practice for the most part, but bad for highly skewed posterior distributions as it’s not guaranteed to contain the most probable value.. In such cases use HPDI (see below).

- If you do have an a posterior that’s highly skewed, make sure to also report the entire distribution.

- Defined as the Percentile Interval (PI)

- Which range of proportions of water is the true value likely to be in with a 80% probability?

- Answer:

quantile(samples, c( 0.10, 0.90))

- Answer:

- Which parameter value has the highest posterior probability?

- Answer: the MAP (see below)

- How much of the posterior probability lies below some parameter value?

Credible Interval - General Bayesian term that is interchangeable with confidence interval. Instead an interval of probability density or mass, it’s based on an interval of the posterior probability. If choice of interval (percentile or hdpi) affects inferences being made, then also report entire posterior distribution.

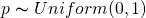

Highest Posterior Density Interval (HPDI) - The narrowest interval containing the specified probability mass. Guaranteed to have the value with the highest posterior probability.

Left: 50% percentile interval assigns equal mass (25%) to both the left and right tail. As a result, it omits the most probable parameter value, p = 1.

Right: 50% HPDI finds the narrowest region with 50% of the posterior probability. Such a region always includes the most probable parameter value.

Disadvantages:

- Expensive

- Suffers from Simulation Variance (i.e. sensitive to number of samples).

- Less familiar to most audiences

See Ch 4. uncertainty section for brms code

Maximum a posteriori (MAP) - value with the highest posterior probability, aka mode of the posterior.

The choice of type of point estimate (MAP, median, mean) should depend on a loss function (e.g. L1, squared –> median, L2 aka quadratic –> mean, etc).

- If the loss is asymmetrical, e.g. cost rises exponentially as loss increases, then I think he’s saying that’s a good time to use MAP.

Dummy Data are simulated data (aka fake data) to take the place of real data.

- Uses

- Checking model fit and model behavior

- Checking the effectiveness of a research design (e.g. power analysis)

- Forecasting

dbinom(0:2, size = 2 , prob = 0.70)gives likelihoods for observing 0Ws, 1W, 2Ws for a trial with 2 flips given conjecture, p = 0.70rbinom(10, size = 2, prob = 0.70)is generating 10 data points given the conjecture (aka parameter value), p = 70%- 10 experiments (or trials) of flipping the globe twice

- output being how many Ws were observed in each experiment

- the output are dummy observations

- rbinom means its a binomial distribution, so only two possible outcomes in each trial

- size = 2 means there are 2 flips per trial and means there are to be either 2 events, 1 event, or 0 events.

- In the globe flipping Example where the event is landing on Water: 2 Water - 0 Land, 1 W - 1 L, 0 W - 2 L

- prob = 0.70 is probability (plausibility of an event) (e.g. landing on Water)

table(rbinom(100000, 2, 0.70) / 100000)is very similar to the output of dbinom above.- Increasing the size from 2 to 9 yields a distribution where the mode is around the true proportion (which would be 0.70 * 9 = 6.3 Ws)

.png)

- The sampling distribution is still wide though, so the majority of experiments don’t result in the around 6 Ws.

- Represents the “garden of forking paths” from Ch. 2

- 10 experiments (or trials) of flipping the globe twice

- Uses

Posterior Predictive Distribution (PPD)

- The PPD is the (simulated) distribution of outcome values we expect to see in the wild given this model and these data.

- Equivalent to computing all the sampling distributions for each p and then averaging (by using the posterior distribution) them together (or integrating over the posterior density). This propagates the uncertainty about p of the posterior distribution into the PPD.

.png)

- (Intuitive) Steps in creating the PPD for the globe Example

- A probability of hitting water (aka parameter value) is sampled from the posterior density (top pic)

- More likely parameter values get sampled more often

- That sampled probability is associated with a count distribution (aka sampling distribution histogram) (middle pic) where the x-axis values are possible total counts of the event after each trial.

- Each p’s sampling distribution is the distribution we’d expect to see if that p was true. (e.g. bell curve for p = 0.50)

- e.g.

rbinom(10, size = 9, prob = 0.70)where the sampled probability is 0.70, trials = 10, and 9 is the number of globe tosses per trial- Therefore, there’s a maximum of 9 events (i.e. hitting the water) possible per trial

- This count distribution (middle pic) gets sampled, and that sampled count is tallied in the PPD (bottom pic)

- e.g. if a 6 is sampled from the count distribution, it’s tallied in the PPD for the “6” on the x-axis

- Repeat (e.g. 1e4 times)

- A probability of hitting water (aka parameter value) is sampled from the posterior density (top pic)

- Computing a PPD:

rbinom(number of trials, size = number of flips per trial, prob = samples from posterior density)- these are counts unlike the posterior density

- therefore maybe the shape is dependent on “size”

- the posterior distribution is used as weights to calculate a weighted, average frequency of W observations for each trial.

- Example:

rbinom(10000, 9, samples)- For trial 1, a coin is flipped 9 times.

- The total number of heads for each trial is determined by sampling the posterior density

- Repeat another 999 times

- A vector is returned where each value is the total number of heads for that trial (e.g. length in Example = 10000)

- For trial 1, a coin is flipped 9 times.

- Example: PPD for tossing the globe

p_grid <- seq( from=0, to=1, length.out=1000) # parameter values vector

prior <- rep(1,1000) # repeat 1 a thousand times to create a flat prior

likelihood <- dbinom(6, size = 9, prob=p_grid) # plausibility of 3 events out of 3 observations for each each conjectured parameter value

posterior_unstd <- likelihood * prior

posterior <- posterior_unstd / sum(posterior_unstd)

samples <- sample(p_grid, prob = posterior, size = 1e4, replace = TRUE) # sample from posterior

ppd <- rbinom(10000, size = 9, prob = samples) # simulate observations to get the PPD

hist(ppd)- Example: brms way

b3.1 <-

brm(data = list(w = 6),

family = binomial(link = "identity"),

w | trials(9) ~ 0 + Intercept,

# this is a flat prior

prior(beta(1, 1), class = b, lb = 0, ub = 1),

iter = 5000, warmup = 1000,

seed = 3,

file = "fits/b03.01")

# sampling the posterior

f <-

fitted(b3.1,

summary = F, # says we want simulated draws and not summary stats

scale = "linear") %>% # linear outputs probabilities

as_tibble() %>%

set_names("p")

# posterior probability density (top pic)

f %>%

ggplot(aes(x = p)) +

geom_density(fill = "grey50", color = "grey50") +

annotate(geom = "text", x = .08, y = 2.5,

label = "Posterior probability") +

scale_x_continuous("probability of water",

breaks = c(0, .5, 1),

limits = 0:1) +

scale_y_continuous(NULL, breaks = NULL) +

theme(panel.grid = element_blank())

# ppd

f <-

f %>%

mutate(w = rbinom(n(), size = n_trials, prob = p))

# ppd histogram (bottom pic)

f %>%

ggplot(aes(x = w)) +

geom_histogram(binwidth = 1, center = 0,

color = "grey92", size = 1/10) +

scale_x_continuous("number of water samples",

breaks = seq(from = 0, to = 9, by = 3)) +

scale_y_continuous(NULL, breaks = NULL, limits = c(0, 5000)) +

ggtitle("Posterior predictive distribution") +

coord_cartesian(xlim = c(0, 9)) +

theme(panel.grid = element_blank())Same process is used for a Prior Predictive Distribution

- The prior distribution takes the place of the posterior density in this case

For a continuous distribution, see Ch 4 >> Prior Predictive Distribution Example

Model fit

- 2 types of uncertainty:

- predicted observation uncertainty

- even if we did know the correct conjecture, p, we wouldn’t know with certainty the outcome of the next trial, unless the correct p = 0 or p = 1.

- See histogram in previous section

- Even if the “true” conjecture (e.g. p = 0.70) is known, there is uncertainty in the count of W observations the next trial will yield.

- Sounds like this is quantified by predictive intervals (PI) given some α

- uncertainty about p

- the posterior distribution over p embodies this uncertainty.

- And this is quantified by credible intervals (CI) given some α

- predicted observation uncertainty

- GOF Question: If we were to make predictions about the most probable p using this model, how consistent is our model with the data?

- Answer: if the shape of the PPD matches the shape of the sampled posterior distribution, then the model is consistent with the data. (i.e. good fit)

- 2 types of uncertainty:

1.3 Chapter 4 - Linear Models, CIs, Splines

- Gaussian Distribution

- Good toy Example that explains the shape of a Normal distribution

- 1000 people stand at the 50 yd line and flip a coin 16 times (doesn’t seem like a lot). Tails move one step left, heads move one step right. The shape of the people after the 16 flips will be a bell curve. If you picture people zigging left and right after flips, there are more possible sequences of flips that end with the person back where they started at the 50 yd line than say, for Example, at the 30 yd line (either goal). Therefore, ending up at the 50 yd line is most probable.

- Reminds me of how there is only one way for your ear bud wires not to be tangled and many, many more ways for them to be tangled.

- 1000 people stand at the 50 yd line and flip a coin 16 times (doesn’t seem like a lot). Tails move one step left, heads move one step right. The shape of the people after the 16 flips will be a bell curve. If you picture people zigging left and right after flips, there are more possible sequences of flips that end with the person back where they started at the 50 yd line than say, for Example, at the 30 yd line (either goal). Therefore, ending up at the 50 yd line is most probable.

- Additive effects make convergence to a Gaussian inevitable - Each flip can be thought of as a fluctuation from the average of the distribution with large positive fluctuations cancelling out large negative fluctuations. The more samples taken (flips), the more opportunities for fluctuations of varying sizes and sign to be paired with a fluctuation of equal size and opposite sign , resulting in a cancellation and a final sum of zero. Therefore, normality emerges as sample sizes get larger. The speed at which the sampling distribution converges to normality depends on the underlying data generating distribution.

- Small multiplicative effects converge to a Gaussian - if the effect of interaction is a small percent (e.g. between 0 and 10% increase/decrease) then the resulting product of fluctuations converges to a Gaussian distribution as well. Small multiplicative effects can be well approximated additively.

- The log of large multiplicative effects converge to a Gaussian - if the effect of interactions is a large percent (e.g. 10-50% increase/decrease), then the product of the fluctuations are Gaussian on the log-scale (Log-Normal).

- Good toy Example that explains the shape of a Normal distribution

- See General Notes -> Notation: for the notation and syntax used in model specification

- It’s a good idea to plot your priors to get a sense of the assumptions in your model.

- Example:

curve(dunif( x, 0, 50), from = -10, to = 60)for σ ~ Uniform( 0, 50 ) constrains the σ to have a positive probability from 0 to 50 cm for a height outcome variable

- Example:

- Prior Predictive Distribution

- Priors can be previous posterior distributions, so they can be sampled in order to see the expected distribution, averaged over the priors, which is a distribution of relative plausibilities before seeing the data.

- Example: height ~ 1

sample_mu <- rnorm(1e4, 178, 20) # where mean = 178 and sigma = 20

sample_sigma <- runif(1e4, 0, 50) # bounded from 0 to 50

prior_predictive_distribution <- rnorm(1e4, sample_mu, sample_sigma) # averaging over the priors, PPD

dens(prior_predictive_distribution) # relative plausibilities before seeing dataWhat our parameter priors (mean, sd) say that we expect the height distribution to look like in the wild.

Sampling means and std devs from posterior

- regardless of sample size, a gaussian likelihood * a gaussian prior will always produce a gaussian posterior distribution for the mean

- the std.dev posterior will have a longer right tail. Smaller sample sizes = longer tail

- variances are always positive. Therefore if the estimate of the variance is near zero, There isn’t much uncertainty about how much smaller it can be because its bounded by zero, but there is no bound on the right, so the uncertainty is larger.

- **brms handles these skews fine ** because it’s posterior sampling function samples from Hamiltonian Monte Carlo (HMC) chains and not the multivariate Gaussian distribution. See Ch.8. Also Ch.9 in the brms version for modeling σ using distributional models in case of heterogeneous variances.

- Similar grid approx. code to top of Ch.3. Instead of one parameter, p, it’s the mean and standard deviation.

- Each value (a row number) that’s sampled represents one combination of potential values of the mean and standard deviation and is selected based on the posterior probability density.

- This posterior density is the joint posterior density of the mean and standard deviation

# sample posterior probability but output the row indices and not the values

sample_rows <- sample(1:nrow(posterior), size = 1e4, replace = TRUE, prob = posterior$prob)

# use sampled row indices to sample the grid values for mu and sigma

sample_mu <- posterior$mu[sample_rows]

sample_sigma <- posterior$sigma[sample_rows]

dens(sample_mu) # Often Guassian

HDPI(sample.sigma) # usually right skewed, extent depends on sample size, may want to use HDPI for CIs(See stat.rethinking brms recode bkmk for ggplot graphs and dplyr code)

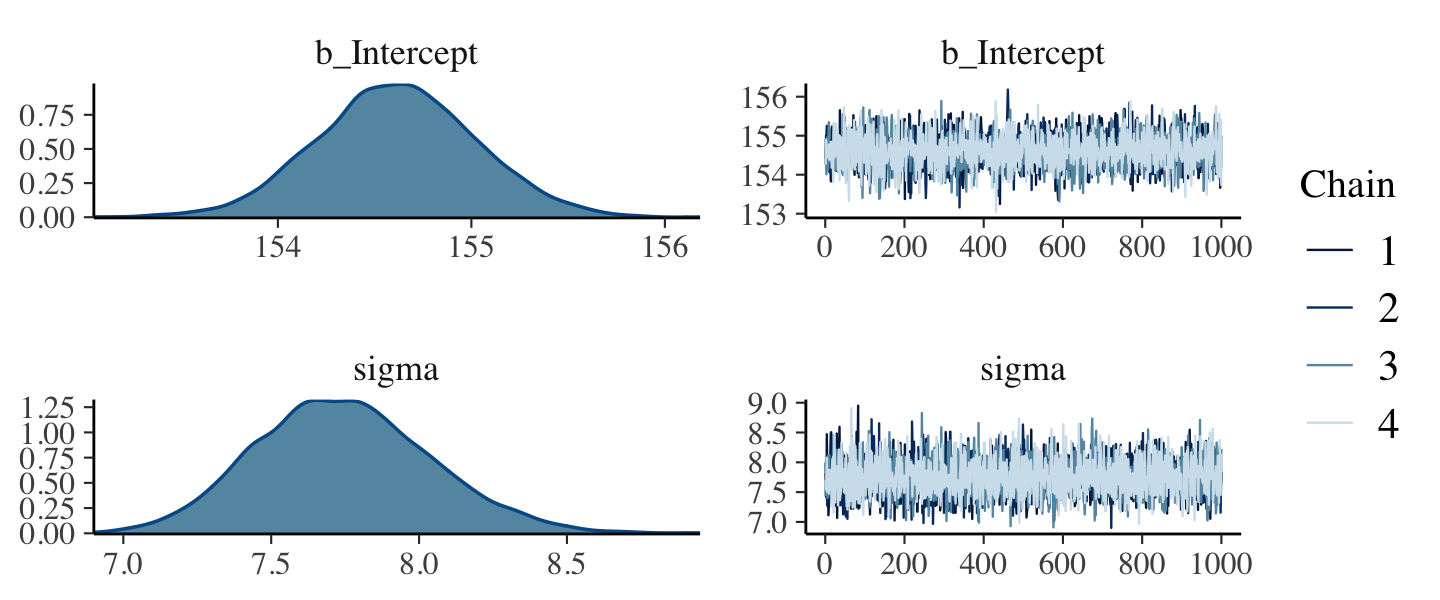

Using quadratic approximation to compute the MAP for the height data

- This is actually HMC instead of quadratic. He uses a MAP function from his package in the book.

- We’re trying to find the maximum a posteriori (MAP) for μ and σ (i.e. the maximum of their posterior probabilities)

# no predictors yet, so just an intercept model

b4.1 <- brm(data = height_dat, family = gaussian,

height ~ 1,

prior = c(prior(normal(178, 20), class = Intercept),

# can use fewer iter and warm-up with a half cauchy prior

# prior(cauchy(0, 1), class = sigma)

prior(uniform(0, 50), class = sigma)),

# iter = 2000, warmup = 1000, chains = 4, cores = 4

iter = 31000, warmup = 30000, chains = 4, cores = 4,

seed = 4, backend = "cmdstanr",

)

summary(b4.1)

posterior_summary(b4.1)

post <- posterior_samples(b4.1)

plot(b4.1)- Only things to pay attention to at this point are the family, formula, and prior arguments, Think the rest of this gets covered in Ch.8.

- flat priors can require a large value in the warm-up arg because of it’s weakness

- summary shows the estimates(means) of mu (intercept) and standard deviation (sigma), standard errors, CIs (percentile) along with the model specifications

- These values are the Gaussian estimates of each parameter’s “marginal” distribution

- The marginal distribution of sigma is the distribution of the residuals

- The marginal distribution for mu means the plausibility of each value of mu, after averaging over the plausibilities of each value of sigma.

- The “averaging over” effect can be seen in the marginal distribution of sigma if you change the the prior for mu to normal(178, 0.1). The posterior estimate for mu will hardly move off the prior, but the estimate for sigma will move quite a bit from before, since the plausibilities for each value of mu changed.

- So the posterior density for sigma gets more squatted and widens with that narrow mu prior. The right tail lengthens as well.

- I think this is an Example of how the marginal distribution of sigma is affected by mu since it has been averaged over the values of mu.

- The “averaging over” effect can be seen in the marginal distribution of sigma if you change the the prior for mu to normal(178, 0.1). The posterior estimate for mu will hardly move off the prior, but the estimate for sigma will move quite a bit from before, since the plausibilities for each value of mu changed.

- These values are the Gaussian estimates of each parameter’s “marginal” distribution

posterior_summaryjust gives the estimates, errors, CIs in a matrix objectposterior_samplesextracts samples from the posterior for each parameter- extracts samples HMC chains

- also outputs the log posterior,

lp__. For details:- https://discourse.mc-stan.org/t/basic-question-what-is-lp-in-posterior-samples-of-a-brms-regression/17567/2

- https://cran.r-project.org/web/packages/rstan/vignettes/rstan.html#the-log-posterior-function-and-gradient

fittedgives the fitted values- newdata + summary = F takes new data and interpolates from the model.

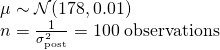

- Strength of priors can be judged by the size of n that it implies using the formula for Gaussian sample std.dev.

- Equivalent to saying you’ve observed 100 heights (1/0.01) that had a mean = 178 cm, sd = 0.01. Considered to be a pretty strong prior.

- Extremely weak prior

- Model

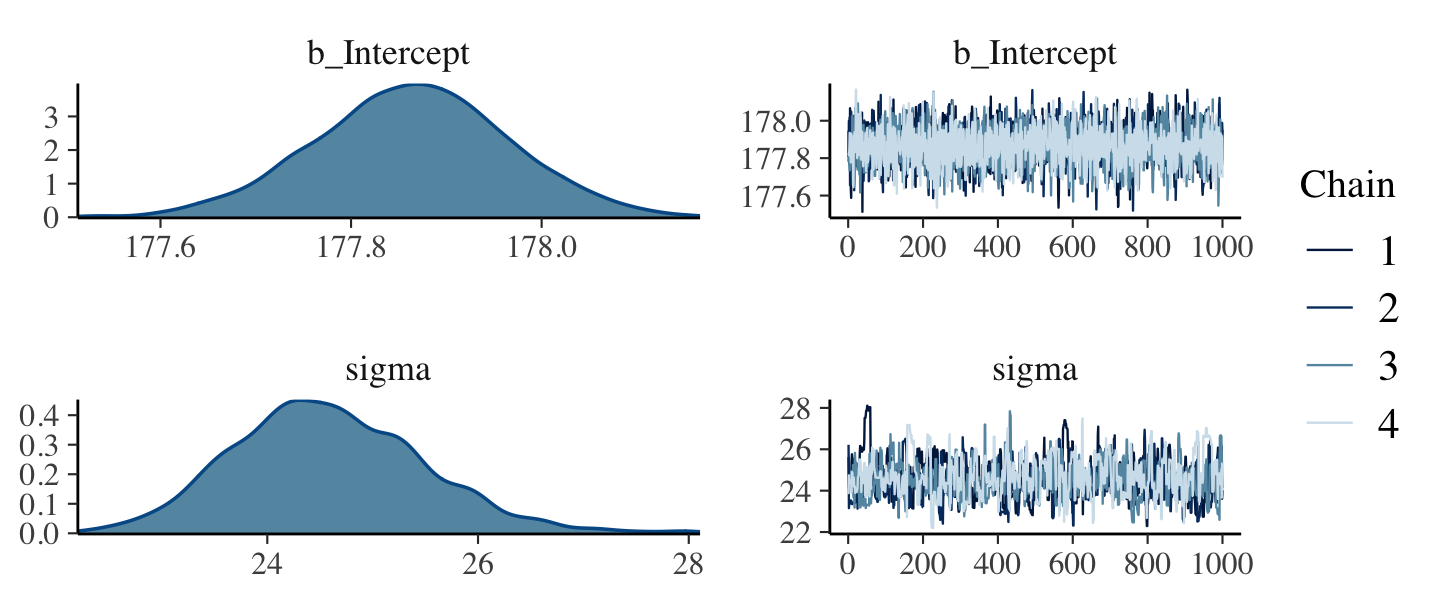

- Example single variable regression where height is the outcome and weight the predictor

- hi ~ Normal(μ, σ)

- μi = α + βxi

- α ~ Normal(178, 100)

- β ~ Normal(0, 10)

- σ ~ Uniform(0, 50)

- hi is the likelihood and ui is the linear model (now deterministic, not a parameter). The rest of the definitions are for priors.

- A Gaussian prior for β with mu = 0 is considered conservative because it will drag the probability mass towards zero, and a β parameter at 0 is equivalent to saying, as a prior, that no relation exists between the predictor and outcome

- In this case, the sigma for this prior is 10, so while it’s conservative, it’s also weak, and therefore it’s pull on β towards zero will be small. The smaller sigma is, the greater the pull of the prior.

- This pull towards 0 is also called shrinkage.

- In this case, the sigma for this prior is 10, so while it’s conservative, it’s also weak, and therefore it’s pull on β towards zero will be small. The smaller sigma is, the greater the pull of the prior.

- Starting values don’t effect the posterior while priors do. (Also see Ch 9 >> Set-up Values)

- inits argument is for starting values in brms

- Either “random” or “0”. If inits is “random” (the default), Stan will randomly generate initial values for parameters.

- If “0”, then all parameters are initialized to be zero.

- “random” randomly selects the starting points from a uniform distribution ranging from -2 to 2

- This option is sometimes useful for certain families, as it happens that default (“random”) inits cause samples to be essentially constant.

- When everything goes well, the MCMC chains will all have traversed from their starting values to sampling probabilistically from the posterior distribution once they have emerged from the warmup phase. Sometimes the chains get stuck around their starting values and continue to linger there, even after you have terminated the warmup period. When this happens, you’ll end up with samples that are still tainted by their starting values and are not yet representative of the posterior distribution.

- setting inits = “0” is worth a try, if chains do not behave well.

- Alternatively, inits can be a list of lists containing the initial values, or a function (or function name) generating initial values. The latter options are mainly implemented for internal testing but are available to users if necessary. If specifying initial values using a list or a function then currently the parameter names must correspond to the names used in the generated Stan code (not the names used in R).

- See this thread which uses a custom function to create starting values for a parameter

- Either “random” or “0”. If inits is “random” (the default), Stan will randomly generate initial values for parameters.

- inits argument is for starting values in brms

- In many cases a weak prior for the intercept is appropriate as it is often uninterpretable. Meaning it should have a wide range given that we can’t intelligibly estimate what range of values it might fall in-between.

- e.g. if the model estimate for the intercept in the height ~ weight model was 113.9. This would mean that the average height for a person with zero weight is 113.9 cm which is nonsense.

- Centering predictors makes the intercept interpretable. The interpretation becomes “when the predictors are at their average value, the expected value of the outcome is the value of the intercept.”

- β and σ estimates aren’t affected by the transformation

- Example single variable regression where height is the outcome and weight the predictor

b4.3 <- brm(data = dat, family = gaussian,

height ~ 1 + weight,

prior = c(prior(normal(178, 100), class = Intercept),

prior(normal(0, 10), class = b),

prior(uniform(0, 50), class = sigma)),

iter = 41000, warmup = 40000, chains = 4, cores = 4,

seed = 4, backend = "cmdstanr",

)

# scatter plot of observed pts with the regression line from the model

# Defined the by the alpha and beta estimate

dat %>%

ggplot(aes(x = weight, y = height)) +

geom_abline(intercept = fixef(b4.3)[1],

slope = fixef(b4.3)[2]) +

geom_point(shape = 1, size = 2, color = "royalblue") +

theme_bw() +

theme(panel.grid = element_blank())The posterior density is a joint density of α (intercept), β, and σ.

Since μ is a function of α and β, it also has a joint density even though it is no longer a parameter (i.e. deterministic and no longer stochastic)

Correlation between parameters

posterior_samples(b4.4) %>%

select(-lp__) %>% # removing log posterior column

cor() %>%

round(digits = 2)- Correlated parameters are potentially a problem for interpretation of those parameters.

- Centering the predictors can reduce or eliminate the correlation between predictor and intercept parameter estimates

- also makes the intercept interpretable (see 2 bullets above)

- Uncertainty

- 2 types of uncertainty (also see end of Ch.3)

- The posterior distribution includes uncertainty in the parameter estimation

- The distribution of simulated outcomes (PI) includes sampling variation (i.e. uncertainty)

- The posterior distribution is a distribution about all possible combinations of α and β with an assigned plausibility for each combination.

- Each combo can fit a line. A plot of these regression lines is an intuitive representation of the uncertainty. The CI for a regression line.

- Interpretation of HDPI when x = x0

- Example: Given a person’s weight = 50kg, what does an 89% HDPI around our model’s height prediction mean?

- “The central 89% of the ways for the model to produce the data place the average height between about 159cm and 160cm (conditional on the model and data), assuming a weight of 50kg.”

- Example: Given a person’s weight = 50kg, what does an 89% HDPI around our model’s height prediction mean?

- 2 types of uncertainty (also see end of Ch.3)

# posterior distribution for x = x0 where x0 = 50 kg

mu_at_50 <- posterior_samples(b4.4) %>%

transmute(mu_at_50 = b_Intercept + b_weight * 50)

# hdmi at 89% and 95% intervals

mean_hdi(mu_at_50[,1], .width = c(.89, .95))

mu_at_50 %>%

ggplot(aes(x = mu_at_50)) +

geom_density(size = 0, fill = "royalblue") +

tidybayes::stat_pointintervalh(aes(y = 0),

point_interval = mode_hdi, .width = .95) +

scale_y_continuous(NULL, breaks = NULL) +

labs(x = expression(mu["height | weight = 50"])) +

theme_classic()- Confidence Interval - repeating the calculation for every x0 will give you the regression line CI

- Example brms regression line CI

# x value (weight) range we want for the CI of the line

weight_seq <- tibble(weight = seq(from = 25, to = 70, by = 1))

# predicted values (height) for each x value

# 95% CIs generated by default

mu_summary <- fitted(b4.3, newdata = weight_seq) %>%

as_tibble() %>%

# let's tack on the `weight` values from `weight_seq`

bind_cols(weight_seq)

# regression line against data with CI shading

dat %>%

ggplot(aes(x = weight, y = height)) +

geom_smooth(data = mu_summary,

aes(y = Estimate, ymin = Q2.5, ymax = Q97.5),

stat = "identity",

fill = "grey70", color = "black", alpha = 1, size = 1/2) +

geom_point(color = "navyblue", shape = 1, size = 1.5, alpha = 2/3) +

coord_cartesian(xlim = range(d2$weight)) +

theme(text = element_text(family = "Times"),

panel.grid = element_blank())A narrow CI doesn’t necessarily indicate an accurate model. If the model assumption of a linear relationship is wrong, then the model is wrong regardless of the narrowness of the CI. The regression line represents the most plausible line and the CI is the bounds of that plausibility.

Prediction Interval

- Procedure

- Generate the range/number of weights (predictor variable) you want a PI for

- Sample σ, α, and β from the posterior distribution the same number of times as the number of weights in your range

- simulate the heights from a gaussian distribution (think rnorm) using the weights (along with α and β) in a linear expression for mean argument and the standard deviations from the posterior (see details in book)

- Example PI and CI around regression line

- Procedure

# 95% PIs generated by default

pred_height <- predict(b4.3,

newdata = weight_seq) %>%

as_tibble() %>%

bind_cols(weight_seq)

# includes regression line, CI, and PI

dat %>% ggplot(aes(x = weight)) +

# PIs

geom_ribbon(data = pred_height, aes(ymin = Q2.5,

ymax = Q97.5), fill = "grey83") +

# CIs

geom_smooth(data = mu_summary, aes(y = Estimate, ymin = Q2.5, ymax = Q97.5),